Meta's Google Partnership Rocks Nvidia's AI Hardware Throne

The artificial intelligence infrastructure world just witnessed a massive earthquake. Nvidia, long considered the undisputed king of AI hardware thanks to its powerful Graphics Processing Units (GPUs), suddenly lost billions in market value. This dramatic shift happened after reports revealed that Meta, the parent company of Facebook, Instagram, and WhatsApp, is teaming up with Google. The partnership aims to train Meta's next-generation Llama AI models using Google's specialized Tensor Processing Units (TPUs).

Nvidia's GPU Shortage Opens Door for Competitors

For years, Nvidia's GPUs have been the gold standard. Every major tech giant and ambitious startup ordered these specialized chips in bulk. This insatiable demand created a global shortage. The supply gap presented a golden opportunity for other players. Companies like AMD stepped in to fill the void. Google also seized the moment, offering its own custom AI chips to tech companies for model training.

Meta's strategic diversification away from Nvidia highlights a critical vulnerability for the chipmaker. It signals that the AI hardware market is maturing rapidly. The era of a "one-size-fits-all" chip solution is ending. Companies now realize that strategic hardware choices can save both significant money and precious time. To understand why Meta would look beyond Nvidia, and why Nvidia would spend a staggering $20 billion to acquire startup Groq, we need to examine the core technology.

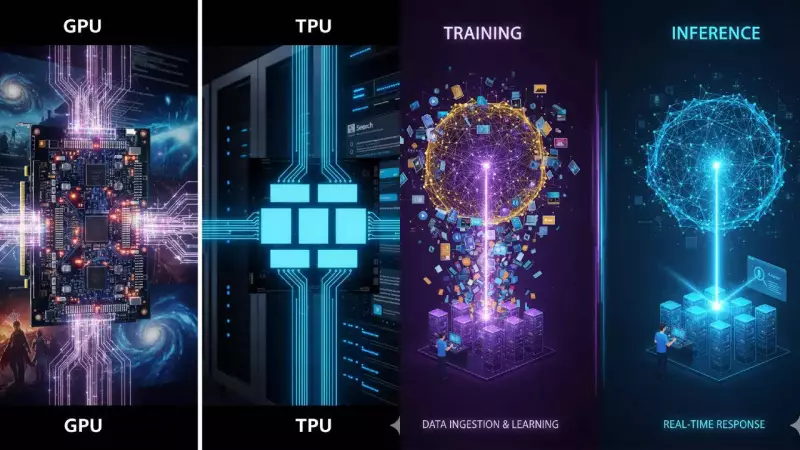

Dissecting the AI Chip Trio: GPU, TPU, and LPU

Part I: Nvidia GPUs – The Versatile Workhorse

Nvidia did not originally design GPUs for artificial intelligence. Their primary purpose was rendering stunning 3D graphics for video games. Creating realistic explosions or subtle light effects required immense computational power. GPUs excelled at handling thousands of pixel calculations simultaneously. They work alongside Central Processing Units (CPUs): the CPU tackles complex tasks one after another in rapid sequence, while the GPU takes on the huge volume of simple, repetitive work in parallel.

Researchers made a crucial discovery. The mathematical operations behind game graphics closely matched those required for training neural networks: both boil down to enormous numbers of matrix multiplications that can run in parallel. Nvidia capitalized brilliantly through CUDA, its software layer that lets developers program graphics cards for general computing. As AI demands exploded, Nvidia's chips, like the powerful H100, became essential. They now train massive Large Language Models, mine cryptocurrency, and accelerate drug discovery.
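To make the graphics-to-AI overlap concrete, here is a minimal pure-Python sketch. The same hand-written `matmul` helper (a hypothetical name, not a library function) rotates 3D vertices the way a game engine would and computes the outputs of a tiny neural-network layer. All numbers are arbitrary illustrative values, and the layer omits the bias and activation function a real network would add.

```python
def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both given as lists of rows."""
    k = len(b)
    m = len(b[0])
    return [[sum(row[t] * b[t][j] for t in range(k)) for j in range(m)] for row in a]

# Graphics: rotate three 3D vertices by 90 degrees around the z-axis.
# Vertices are rows, so we multiply by the transpose of the rotation matrix.
vertices = [[1.0, 0.0, 0.0],
            [0.0, 1.0, 0.0],
            [1.0, 1.0, 1.0]]
rot_z_90_t = [[0.0, 1.0, 0.0],
              [-1.0, 0.0, 0.0],
              [0.0, 0.0, 1.0]]
rotated = matmul(vertices, rot_z_90_t)

# Neural network: a dense layer mapping 3 input features to 2 outputs,
# applied to a batch of 2 examples (bias and activation omitted).
inputs = [[0.5, -1.0, 2.0],
          [1.0, 0.0, -0.5]]
weights = [[0.2, -0.1],
           [0.4, 0.3],
           [-0.6, 0.8]]
activations = matmul(inputs, weights)

print(rotated)
print(activations)
```

Every output entry is an independent sum of products, which is exactly the kind of work a GPU can spread across thousands of cores at once.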

Part II: Google TPUs – The Specialized Speed Demon

Google took a fundamentally different path. By 2015, the company's heavy reliance on AI for Search, Photos, and Translate demanded more power and speed. Expanding data centers was not enough. Google decided to build its own chip from the ground up: the Tensor Processing Unit (TPU).

A TPU is an Application-Specific Integrated Circuit (ASIC): rather than handling any kind of computation, it is built to execute a narrow set of tasks at very high speed. Consider using Google Translate at a train station in Japan. You need an accurate translation delivered instantly. A slow response could make you miss your train. This scenario underscores the need for "instant" AI. TPUs are engineered specifically for this, accelerating tensor mathematics (the large multi-dimensional array arithmetic at the heart of neural networks) with remarkable efficiency.

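Their architecture is unique. CPUs and GPUs repeatedly read operands from memory and write results back. TPUs instead use a systolic array, a grid of multiply-accumulate units through which data moves in a continuous flow, with each unit passing values directly to its neighbor. This design reduces memory traffic, cutting electricity consumption and boosting speed for matrix operations. Furthermore, Google has traditionally not sold its data-center TPUs outright, offering them instead as a service through Google Cloud Platform.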
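The "continuous flow" idea can be sketched as a toy, cycle-by-cycle simulation of a systolic array. This is a simplified output-stationary version written for illustration (`systolic_matmul` is a hypothetical name), and real TPU matrix units differ in the details, for example in which operand stays fixed inside each cell. Each cell keeps a running sum, receives one value from its left neighbor and one from the cell above on every clock cycle, and passes both along. Input values are fetched from memory only at the edges of the grid and then reused as they flow through.

```python
def systolic_matmul(a, b):
    """Simulate an output-stationary systolic array computing a @ b.

    a is n x p and b is p x m (lists of rows). Cell (i, j) accumulates c[i][j].
    Rows of `a` enter from the left and columns of `b` from the top, skewed by
    one cycle per row/column so that matching operands meet in the right cell.
    Returns the product and the number of values fetched from memory.
    """
    n, p, m = len(a), len(b), len(b[0])
    acc = [[0] * m for _ in range(n)]      # one running sum per cell
    a_reg = [[0] * m for _ in range(n)]    # value each cell passes to the right
    b_reg = [[0] * m for _ in range(n)]    # value each cell passes downward
    memory_reads = 0
    for t in range(n + m + p - 2):         # cycles until the last operands meet
        new_a = [[0] * m for _ in range(n)]
        new_b = [[0] * m for _ in range(n)]
        for i in range(n):
            for j in range(m):
                if j == 0:                 # left edge: fetch a[i][t - i] from memory
                    k = t - i
                    x = 0
                    if 0 <= k < p:
                        x = a[i][k]
                        memory_reads += 1
                else:                      # interior: reuse the left neighbor's value
                    x = a_reg[i][j - 1]
                if i == 0:                 # top edge: fetch b[t - j][j] from memory
                    k = t - j
                    y = 0
                    if 0 <= k < p:
                        y = b[k][j]
                        memory_reads += 1
                else:                      # interior: reuse the upper neighbor's value
                    y = b_reg[i - 1][j]
                acc[i][j] += x * y         # multiply-accumulate in place
                new_a[i][j], new_b[i][j] = x, y
        a_reg, b_reg = new_a, new_b        # values advance one cell per cycle
    return acc, memory_reads

c, reads = systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(c, reads)
```

For this 2 × 2 example the array fetches each input once, 8 reads in total. A naive loop that reloaded both operands for every multiplication would issue 2 × n × m × p = 16 reads. Real CPUs and GPUs narrow that gap with caches, but the systolic design builds the reuse directly into the hardware.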

Part III: Training vs. Inference – The AI Lifecycle

Understanding the need for different chips requires grasping two key AI stages: Training and Inference.

Training: This is the AI's university phase. The model ingests trillions of words, images, and videos to learn patterns and connections. It is computationally monstrous and can take months. Nvidia GPUs dominate here because training involves extensive experimentation, as researchers keep adjusting model designs and training recipes. Their general-purpose flexibility suits this trial and error, much like a student who draws on many different textbooks to master one subject. Major labs, including OpenAI, have relied heavily on Nvidia GPUs for training.

Inference: This is when the AI goes to work. When you type a prompt into Gemini and get an answer, that's inference. The model applies its learned knowledge to produce results, akin to a practicing doctor using medical training. While GPUs excel at learning, TPUs can offer lower latency and better cost-efficiency for many inference workloads. This explains the industry's shift towards a mixed hardware strategy.
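The split between the two stages shows up even in a toy model. The sketch below (pure Python; `train` and `predict` are hypothetical names) fits a one-variable linear model by gradient descent on made-up, noise-free data. Training loops over the data thousands of times, computing errors and adjusting parameters. Inference is a single multiply-and-add with the parameters frozen. Large Language Models follow the same pattern with billions of parameters, which is why the two stages stress hardware so differently.

```python
def predict(w, b, x):
    """Inference: one cheap forward pass using already-learned parameters."""
    return w * x + b

def train(data, epochs=2000, lr=0.01):
    """Training: repeated forward passes, error measurement and parameter updates."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            err = predict(w, b, x) - y      # forward pass, then measure the error
            grad_w += 2 * err * x / n       # gradient of the mean squared error
            grad_b += 2 * err / n
        w -= lr * grad_w                    # update step
        b -= lr * grad_b
    return w, b

# Illustrative data generated from y = 3x + 1.
data = [(x, 3 * x + 1) for x in range(-5, 6)]
w, b = train(data)
print(round(w, 3), round(b, 3))   # learned parameters, close to 3 and 1
print(predict(w, b, 10))          # inference on a new input
```

Here training performs 2,000 × 11 = 22,000 forward passes plus the matching gradient updates, while answering one new query afterward takes a single forward pass.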

Meta's move makes perfect sense in this context. As Meta integrates Llama AI into its products, it is transitioning from a pure training phase to a heavy inference phase, where speed is paramount.

Part IV: The LPU Emerges and Nvidia's $20 Billion Gambit

Shortly after the Meta reports surfaced, Nvidia made a colossal strategic move. It acquired startup Groq for approximately $20 billion. The prize is Groq's Language Processing Unit (LPU), developed by a team led by founder Jonathan Ross, who previously contributed to Google's TPU.

Groq describes the LPU as a new category of processor built from scratch for AI. It promises to run Large Language Models substantially faster, and Groq claims that LPUs can be up to ten times more energy-efficient than GPUs for inference tasks.
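Energy efficiency for inference is often compared as energy per generated token, which is power draw divided by throughput. The helper below (a hypothetical name) shows what an "up to ten times" claim means in those terms. The wattages and token rates are made-up placeholders chosen to make the arithmetic easy, not measured figures for any Groq, Nvidia or Google chip.

```python
def joules_per_token(power_watts: float, tokens_per_second: float) -> float:
    """Energy per generated token: watts are joules per second, so divide by tokens per second."""
    return power_watts / tokens_per_second

# Hypothetical placeholder numbers, for illustrating the arithmetic only.
gpu = joules_per_token(power_watts=1000.0, tokens_per_second=500.0)   # 2.0 J per token
lpu = joules_per_token(power_watts=500.0, tokens_per_second=2500.0)   # 0.2 J per token

print(f"GPU: {gpu:.2f} J/token, LPU: {lpu:.2f} J/token, ratio: {gpu / lpu:.1f}x")
```

A chip can win on this metric by drawing less power, by generating tokens faster, or by doing both.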

This acquisition transforms Nvidia's offering. It can now provide GPUs for the training phase and LPUs for the inference phase, where speed is everything. Nvidia evolves from a "GPU company" into a "Total Compute Company." The move directly counters the threat from Google's TPUs. If a customer complains about slow GPU inference, Nvidia can now offer its own high-speed LPU solution.

Part V: The Stubborn Software Hurdle

A critical question remains. If TPUs are so efficient, why hasn't everyone switched already? The answer lies in software. Nvidia's greatest asset is its CUDA software ecosystem: developers have written millions of lines of CUDA code and built specialized tools around it to program GPUs. Switching from a GPU to a TPU isn't like changing smartphones. It's akin to learning a new language from scratch, often requiring rewritten code and different frameworks.

However, this barrier is slowly crumbling. Google Cloud CEO Thomas Kurian recently stated the company is actively working to make AI models more portable. The goal is to write code once and run it on any chip. The battle is shifting from raw power to a trifecta of efficiency, speed, and specialization.
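Frameworks such as JAX (which compiles through Google's XLA compiler to CPUs, GPUs and TPUs) and PyTorch already separate model code from the chip it runs on. The pure-Python sketch below illustrates the "write once" idea in miniature. The model is written against a small, hypothetical `Backend` interface, and swapping the backend changes where the arithmetic would run without touching the model. Both backend classes are invented stand-ins that run on the CPU; they are not real GPU or TPU drivers.

```python
from typing import List, Protocol

Matrix = List[List[float]]

class Backend(Protocol):
    """Hypothetical hardware interface: the only operations the model may use."""
    name: str
    def matmul(self, a: Matrix, b: Matrix) -> Matrix: ...
    def relu(self, a: Matrix) -> Matrix: ...

class ReferenceBackend:
    """Stand-in for one chip: straightforward loops."""
    name = "reference"

    def matmul(self, a: Matrix, b: Matrix) -> Matrix:
        return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

    def relu(self, a: Matrix) -> Matrix:
        return [[max(0.0, v) for v in row] for row in a]

class CountingBackend(ReferenceBackend):
    """Stand-in for a different chip: same results, but tracks multiply-accumulates."""
    name = "counting"

    def __init__(self) -> None:
        self.macs = 0

    def matmul(self, a: Matrix, b: Matrix) -> Matrix:
        self.macs += len(a) * len(b) * len(b[0])
        return super().matmul(a, b)

def tiny_model(backend: Backend, x: Matrix, w1: Matrix, w2: Matrix) -> Matrix:
    """Model code written once: it never mentions a specific chip."""
    hidden = backend.relu(backend.matmul(x, w1))
    return backend.matmul(hidden, w2)

x = [[2.0, 1.0]]
w1 = [[0.5, -1.0], [0.25, 0.5]]
w2 = [[2.0], [1.0]]
for backend in (ReferenceBackend(), CountingBackend()):
    print(backend.name, tiny_model(backend, x, w1, w2))
```

The design choice is the narrow interface: as long as every backend implements the same small set of operations, the model code stays portable, which is the same principle real compilers like XLA apply at a much larger scale.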

Part VI: The Rising Importance of High-Speed Memory

As AI services reach millions of users, tech companies face new challenges. They must deliver fast responses to vast numbers of people simultaneously. This requires more TPUs and LPUs, and it also highlights the need for advanced memory. High Bandwidth Memory (HBM) is becoming crucial for the next generation of computing.

Large Language Models need massive data streams to flow uninterrupted through processors. HBM stacks memory chips vertically in the same package as the processor, delivering far more bandwidth than conventional memory so that GPUs and AI accelerators spend less time waiting for data. This significantly cuts training times and reduces latency during real-time inference. Beyond speed, HBM moves data with better energy efficiency. Industry leaders like Nvidia and AMD already build HBM into their flagship AI chips for high-performance computing.
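A back-of-the-envelope calculation shows why bandwidth matters so much for inference. When a model generates text one token at a time for a single user, the processor must read essentially all of the model's weights from memory for every token, so memory bandwidth caps the token rate. The sketch assumes a hypothetical 70-billion-parameter model stored at 2 bytes per parameter and two illustrative bandwidth figures. It ignores batching, caching, compute time and whether the weights fit on one chip, so it gives an upper bound on speed, not a benchmark.

```python
def max_tokens_per_second(params: float, bytes_per_param: float,
                          bandwidth_bytes_per_s: float) -> float:
    """Upper bound on single-stream decoding speed when every token streams all weights once."""
    model_bytes = params * bytes_per_param
    seconds_per_token = model_bytes / bandwidth_bytes_per_s
    return 1.0 / seconds_per_token

PARAMS = 70e9          # hypothetical 70-billion-parameter model
BYTES_PER_PARAM = 2    # 16-bit weights

# Illustrative bandwidth figures only, not specifications of any product.
for label, bandwidth in [("0.5 TB/s", 0.5e12), ("3 TB/s", 3e12)]:
    rate = max_tokens_per_second(PARAMS, BYTES_PER_PARAM, bandwidth)
    print(f"{label}: at most {rate:.1f} tokens/s")
```

With 140 GB of weights, six times more bandwidth raises the ceiling six times, from roughly 3.6 to roughly 21.4 tokens per second, no matter how fast the processor's arithmetic units are.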

Conclusion: A New Era of Specialized AI Hardware

The emergence of specialized chips for distinct tasks marks a new stage in the AI industry's evolution. As the market matures, tech companies will increasingly use a combination of chips. This hybrid approach promises greater efficiency, cost-effectiveness, and low-latency performance.

GPUs will remain the workhorses for training, building sophisticated models at trillion-parameter scales. Specialized chips like TPUs and LPUs will handle the daily grind of serving AI to users worldwide. The landscape is no longer dominated by a single king. It is becoming a diverse and competitive ecosystem where the right tool is chosen for the right job.