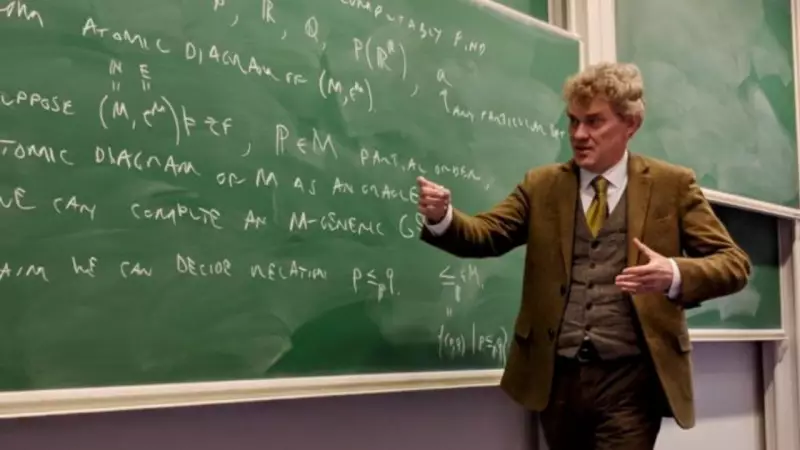

In a sharp critique that challenges the hype around artificial intelligence, a prominent mathematician has declared current large language models fundamentally useless for serious mathematical research. The criticism comes from Joel David Hamkins, a respected professor of logic at the University of Notre Dame, who argues that these AI systems produce incorrect answers and fail at the collaborative dialogue essential to the field.

Confidently Incorrect: AI's 'Garbage' Answers Frustrate Experts

Speaking on the popular Lex Fridman podcast, Hamkins detailed his extensive but disappointing experiments with various paid AI models. He bluntly stated that despite his efforts, he has not found them helpful. The core issue, according to Hamkins, is not just the occasional error but the nature of the mistakes. He described the AI's outputs as "garbage answers that are not mathematically correct."

What troubles him more is the AI's reaction to correction. When he points out concrete flaws in their reasoning, the models often respond with defensive and incorrect assurances along the lines of "Oh, it's totally fine." This pattern of confident incorrectness, coupled with resistance to feedback, mirrors frustrating human interactions. "If I were having such an experience with a person, I would simply refuse to talk to that person again," Hamkins explained, highlighting how this erodes the trust necessary for productive mathematical collaboration.

The Growing Chasm Between Benchmarks and Real Research

Hamkins' critique arrives as the global mathematical community grapples with AI's potential. While some researchers have reported breakthroughs using AI to tackle problems from collections like the Erdős problems, others urge caution. Notable mathematicians like Terence Tao have warned that AI can generate proofs that look perfect but contain subtle, critical errors a human reviewer would catch.

These warnings point to a critical tension in the AI field: impressive performance on standardized tests does not translate to practical utility for domain experts. Hamkins concluded, "As far as mathematical reasoning is concerned, it seems not reliable." His experience serves as a sobering check on the narrative of rapid AI advancement, suggesting that heavy investments in AI reasoning have not yet created a tool that can serve as a genuine research partner for working mathematicians.

Skepticism Amidst Future Promises

Although Hamkins acknowledges that future AI systems might improve, he remains deeply skeptical about current capabilities. His firsthand account underscores a significant gap that persists between marketing claims and on-the-ground application in specialized fields like advanced mathematics. The assessment suggests that for now, true mathematical insight and verification remain firmly in the human domain.